Is ChatGPT conscious?

Given what we know of consciousness and how ChatGPT works, what can we say about whether or not it's conscious?

What’s GPT-3?

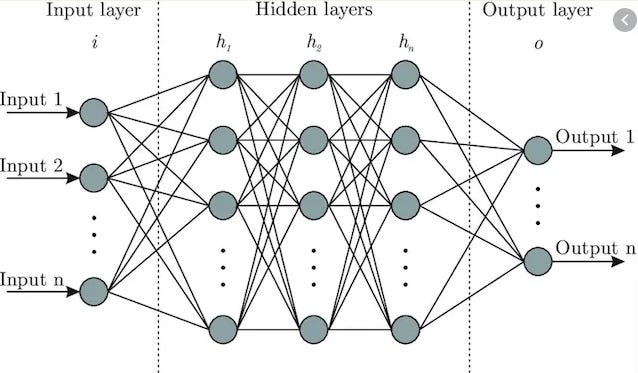

GPT-3 is what’s called a “large language model”. Under the hood, it’s a specialized neural network, which is a kind of computer program. A neural network is a software system made up of a virtual network of simple computing units called “neurons”, so named because they loosely mimic the way the brain’s neurons work.

These neurons are wired together, and each connection carries a number called a “weight”. The weights (together with a few other adjustable numbers) are the model’s “parameters”: they record how strongly activity in one neuron pushes the neurons it connects to. When the network runs, activity flows from neuron to neuron, a little like electrical impulses shooting through the brain. Groups of neurons come to encode concepts at different levels, so this structure lets the network record how concepts relate to one another, from low-level concepts such as words to very high-level ones such as “explains how bridges are built”.
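To make neurons and weights a little more concrete, here is a minimal Python sketch of a single artificial neuron and a layer of them. It is a toy under stated assumptions: the weights and biases are made-up numbers, and the sigmoid squashing function is chosen for simplicity (models like GPT-3 use other activation functions and billions of parameters). The point is only that a neuron’s output is a weighted sum of its inputs, and that the weights are the parameters that learning adjusts.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: a weighted sum of its inputs, squashed into (0, 1)."""
    # Each weight says how strongly the matching input pushes this neuron.
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Sigmoid squashing: a rough stand-in for "how strongly this neuron fires".
    return 1.0 / (1.0 + math.exp(-total))

def layer(inputs, weight_rows, biases):
    """A layer: several neurons reading the same inputs, each with its own weights."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical parameters: 3 input neurons feeding 2 output neurons.
# These numbers are the "parameters"; training is what adjusts them.
weights = [[0.5, -1.0, 2.0],
           [1.5, 0.0, -0.5]]
biases = [0.0, 0.1]

activations = layer([1.0, 0.5, 0.25], weights, biases)
print(activations)
```

Training a network means nudging numbers like `weights` and `biases` so the outputs become more useful; the code that runs the network stays the same.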

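What sets this kind of language model apart is that it is built on an architecture called the Transformer, which uses a mechanism called “multi-head attention”. As it reads a passage, attention lets the representation of each word be updated based on the other words around it, and having several “heads” lets it track several kinds of relationship at once, at different levels. This is roughly what gives it a “big picture” view of the content, even as the context shifts.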
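For readers curious what “attention” actually computes, here is a stripped-down sketch of multi-head self-attention in plain Python. Each token vector is projected into a query, a key and a value; each token’s new representation is a blend of the value vectors, weighted by how well its query matches each key. The sizes and random projection matrices are hypothetical, and a real Transformer adds much more around this (an output projection, normalization, feed-forward layers, and vastly larger dimensions). The causal mask, which only lets a token look at itself and earlier tokens, reflects how GPT-style models generate text one word at a time.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [dot(row, v) for row in m]

def softmax(xs):
    # Subtract the max for numerical stability; the result sums to 1.
    mx = max(xs)
    exps = [math.exp(x - mx) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention_head(xs, wq, wk, wv, causal=True):
    """Scaled dot-product self-attention for one head over a list of token vectors."""
    qs = [matvec(wq, x) for x in xs]  # what each token is "looking for"
    ks = [matvec(wk, x) for x in xs]  # what each token "offers"
    vs = [matvec(wv, x) for x in xs]  # the content each token passes along
    d = len(qs[0])
    out = []
    for i, q in enumerate(qs):
        # With a causal mask, token i may only look at tokens 0..i.
        visible = range(i + 1) if causal else range(len(xs))
        weights = softmax([dot(q, ks[j]) / math.sqrt(d) for j in visible])
        # New representation: a relevance-weighted blend of the visible values.
        out.append([sum(w * vs[j][c] for w, j in zip(weights, visible)) for c in range(d)])
    return out

def multi_head_attention(xs, heads, causal=True):
    """Run each head independently, then concatenate their outputs per token."""
    per_head = [attention_head(xs, wq, wk, wv, causal) for wq, wk, wv in heads]
    return [sum((h[t] for h in per_head), []) for t in range(len(xs))]

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

# Hypothetical toy sizes: 3 tokens, 4-dimensional vectors, 2 heads of size 2.
rng = random.Random(0)
d_model, d_head, n_heads = 4, 2, 2
tokens = [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(3)]
heads = [tuple(random_matrix(d_head, d_model, rng) for _ in range(3))
         for _ in range(n_heads)]

out = multi_head_attention(tokens, heads)
print(len(out), len(out[0]))  # prints "3 4"
```

Each head learns (in a real model) its own notion of which words matter to which, and concatenating the heads gives every token a representation informed by several kinds of context at once.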

It’s then fed a huge corpus of text, as much as possible. In the case of OpenAI’s GPT-3, that meant a large portion of the public Internet in text form: filtered web crawl data, along with books and Wikipedia.

So, what’s interesting about this is that in a neural network the learning process is actually a form of lossy compression. The network can read text, work out which concepts it relates to, and then encode it in terms of those concepts rather than storing the original text.


You can see this when it tries to quote from specific books: it reconstructs the quote from the concepts it learned, and does so loosely, so that instead of the original words you get the general gist of what was communicated.
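As a deliberately crude analogy for this kind of lossy, concept-based compression, the sketch below maps several words onto one shared “concept” and then re-expresses each concept with a single default word. The concept table is entirely hypothetical, and GPT-3 does nothing this explicit (its concepts are spread across learned weights, not stored in a lookup table), but the effect is similar in spirit: the gist survives while the exact wording is lost.

```python
# Hypothetical concept table: several surface words share one concept.
CONCEPTS = {
    "big": "BIG", "huge": "BIG", "large": "BIG", "enormous": "BIG",
    "fast": "FAST", "quick": "FAST", "rapid": "FAST",
    "bridge": "BRIDGE", "span": "BRIDGE",
}
# On the way back out, each concept is expressed with one default word.
CANONICAL = {"BIG": "big", "FAST": "fast", "BRIDGE": "bridge"}

def compress(text):
    """Swap each known word for its concept: many words to one, so information is lost."""
    return [CONCEPTS.get(word, word) for word in text.lower().split()]

def decompress(codes):
    """Re-express concepts as words: the gist comes back, the exact wording does not."""
    return " ".join(CANONICAL.get(code, code) for code in codes)

original = "The enormous span was built at a rapid pace"
restored = decompress(compress(original))
print(restored)  # prints "the big bridge was built at a fast pace"
```

Because different phrasings collapse onto the same concepts, “a huge bridge” and “a large span” come back out identically, which is exactly why the original words can’t be recovered.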

Given that this is a highly efficient form of data compression, and that a number of researchers have proposed that consciousness is itself this kind of data compression, does this mean that GPT-3 is conscious?

Consciousness from Data Compression

Behaviorally, it can give the impression that it is. It can talk like a human, and it could certainly fool someone into believing they were communicating with a human. But behavior isn’t necessarily an indication of consciousness, as we’ve seen in studies of blindsight, or even in people who sleepwalk.

In those cases we may be able to say that they’re not conscious because there’s no active data compression going on - no confrontation with novelty that would be required for that.

And when you are interacting with GPT-3, it isn’t learning: its parameters stay fixed, and it uses your input to predict what should come next. You could say that it’s still compressing your query, because when it analyzes it, it maps your query onto the concepts it has learned, reducing it to those concepts. But that compression is temporary: once it provides the answer, the data is discarded and the model is unchanged. And in the papers that discuss consciousness as data compression, it’s the process of changing a compressed model that they identify with consciousness, not temporary lookups.
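The difference between answering a query and learning can be sketched with a toy next-word predictor. This is a bigram model whose only parameters are counts of which word follows which, a far simpler technique than GPT-3’s neural network, chosen purely for illustration. What it shares with GPT-3 is the split described above: `train` changes the model, while `predict_next` only reads it, so nothing about the prompt is kept once the answer is returned.

```python
from collections import Counter, defaultdict

class BigramModel:
    """Toy next-word predictor whose only parameters are counts of word pairs."""

    def __init__(self):
        self.counts = defaultdict(Counter)

    def train(self, text):
        # Learning: update the pair counts, permanently changing the model.
        words = text.lower().split()
        for prev, nxt in zip(words, words[1:]):
            self.counts[prev][nxt] += 1

    def predict_next(self, prompt):
        # Inference: read the counts, never write them, so the prompt leaves no trace.
        words = prompt.lower().split()
        if not words or words[-1] not in self.counts:
            return None
        return self.counts[words[-1]].most_common(1)[0][0]

    def snapshot(self):
        # Plain-dict copy of the parameters, handy for comparing before and after.
        return {prev: dict(nxts) for prev, nxts in self.counts.items()}

model = BigramModel()
model.train("the bridge spans the river and the bridge is long")

before = model.snapshot()
print(model.predict_next("look at the"))  # prints "bridge"
print(model.snapshot() == before)          # prints "True": answering left the model unchanged
```

Calling `train` again would change the counts, and with them future predictions; that is the kind of lasting change to a compressed model that answering a query never makes.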

Why is that such an important difference to whether something is conscious or not? This is difficult to know for sure, but it could be related to continuity from one moment to the next. When we are conscious, there is always a bridge from one state of the model to the next, and these discrete steps create the line of continuity that we seem to require to be conscious. It’s been called the “specious present”. If this state resets at the end of each query, that continuity is broken. It’s much like waking from a dream: we lose the continuity, and it’s that loss that makes us forget what was in the dream and then consider the whole episode as one in which we weren’t conscious.

Now, while it was training, you might have a better case for saying it was conscious. It was finding new information, learning, and compressing and expanding its model as it did so. Yet during training it showed no behavior that would have appeared human.

And that tells us something that may be significant: is it thinking, and not behavior, that produces consciousness? And not even thinking, but learning. Long-term learning: memorizing.

But surely it can’t be that simple, because otherwise just recording data onto an SD card or a hard drive would count as being conscious, which seems far too simplistic. And if it only happens with large, complex models, then how complex?

Irreducible?

Such questions make me wonder whether a reductionist approach to consciousness is even tenable. Does consciousness really emerge from processes? Is there really a point of complexity where it reaches a threshold and consciousness just “switches on”? Even if that is the case, it must surely be a gradient, where consciousness comes on gradually. But even then, what is qualitatively different between a less complex system and a more complex one? Surely the actual experience of consciousness is an entirely different phenomenon from electricity moving around neurons.

Is it possible that something entirely different is going on: that the driving force for consciousness is something else, and that what we see as a cause is merely an explanation of what is perceived through this consciousness once we probe its mechanisms? Is a neural network just an explanation of the observed mechanisms of conscious behavior, but not the actual cause?

This is something that needs some serious consideration, and it’s something I delve into in my article on the metaphysics of Idealism.

It seems that we have now demonstrated that these observed mechanisms can be replicated, as in GPT-3, to create a kind of human-seeming being without any actual consciousness there. A literal p-zombie, or at least a behavioral zombie?

Yet if this is true, then it raises many questions: is it possible there are other humans that are also acting like they’re conscious but aren’t? And what is it that even creates these conscious experiences then? If there isn’t even evidence of other people having the same conscious experience, is it all just me? Is everything else just behavior that reflects my own conscious experience, but doesn’t have any inherent consciousness?

And wouldn’t that be idealist, if not solipsist?

I somehow feel that ChatGPT wouldn’t agree with this assessment.